AI Video Breakdown: “Meetings of Love” Video FAQs

We had a little fun (okay, too much fun) making our 2026 New Year’s greeting with “Meetings of Love” and its accompanying video. Here it is, if you missed it:

Since its release, our friends and colleagues have been asking:

- How we made the song and accompanying music video

- How much was AI vs. human

- What the experience was like

So, we put together these FAQs for the questions we received most, or that we think will generate the most helpful answers. We hope you find them useful.

Note: AI tools are advancing rapidly. This info was current as of December 2025, when we put the song and video together.

Q: Why a song and music video?

We send humorous time-capsule messages at the holidays, poking fun at the absurdities and our shared experiences of the moment. We sometimes send them in December for traditional holiday timing, and sometimes as New Year greetings instead. A few examples:

This year, while brainstorming card ideas, Arien, our tech and AI ops lead, suggested that we create a song in Suno for this year’s message. (He introduced the idea with a song that trolled the rest of the team and positioned himself as a hero, but we’ll let that go here.) We loved the idea and decided to dig into frustrations that virtually everyone we know is venting about:

- AI “productivity”

- Meeting sprawl

- How the former is creating more of the latter

When song production was complete, Khali (our senior partner) said we should make a music video instead of just putting a track on Spotify. The team agreed, and we dove in. We also gave ourselves a fun handicap to keep it all on theme: we’d use AI tools, with all their magic and mayhem, as much as possible.

Also, we’re legitimate nerds. Not in the fashionable way, but the “Oh my god, you people are nerds!” way. This project gave us an excuse to press some models against the wall to see how they performed.

Q: Why the cartoon style for the band/team scenes?

Since the song is light-hearted, we thought a cartoon style would work well. Plus, we have team members who can use animation puppet tools, and we wanted to see how current AI animation compares with that approach. We were particularly interested in syncing to the soundtrack, and especially in whether current AI could lip-sync convincingly. The same went for the instruments (especially the drums). The video was as much a lab experiment as a project.

Q: Who’s who in the video?

In order of appearance (cartoon versions):

- Arien (AI, Coding and IT Director) – piano

- Israel (Art Director) – lead vocal

- Khali (Senior Partner, Content Chief) – lead vocal

- Keith (Digital Marketing Manager) – bass guitar, backing vocals

- Seth (Marketing Ops Manager) – guitar, backing vocals

- Casey (Managing Partner, Chief AI and Strategy Officer) – drums

- Dylan (Strategic Account Director) – triangle

Q: Did your team write the song?

The lyrics? Yes.

The music? “Orchestrating” through Suno (by Arien, a musician) is probably the right way to describe it. We’re not sure how to apportion credit properly. If you’ve used a tool like this and have a musical background, you probably know what we mean. It’s you, but AI is so entrenched, and speeds you up and rounds you out so much, that it’s hard to separate the two.

Q: Did your team perform the song?

No. Many in our team can sing and play instruments, but this was an AI project. “Meetings of Love” is performed entirely by Suno, save the final triangle strike at the very end of the video. Israel, depicted as the lead singer in the video, has joked that he needs to learn the song so he can sing it when he’s asked at trade shows. (He’s a vocal coach and capable tenor, so… maybe?)

Q: Are the non-cartoon portions stock footage?

Three of them are. The rest are AI-generated to fit the context/lyrics.

Q: What software did you use?

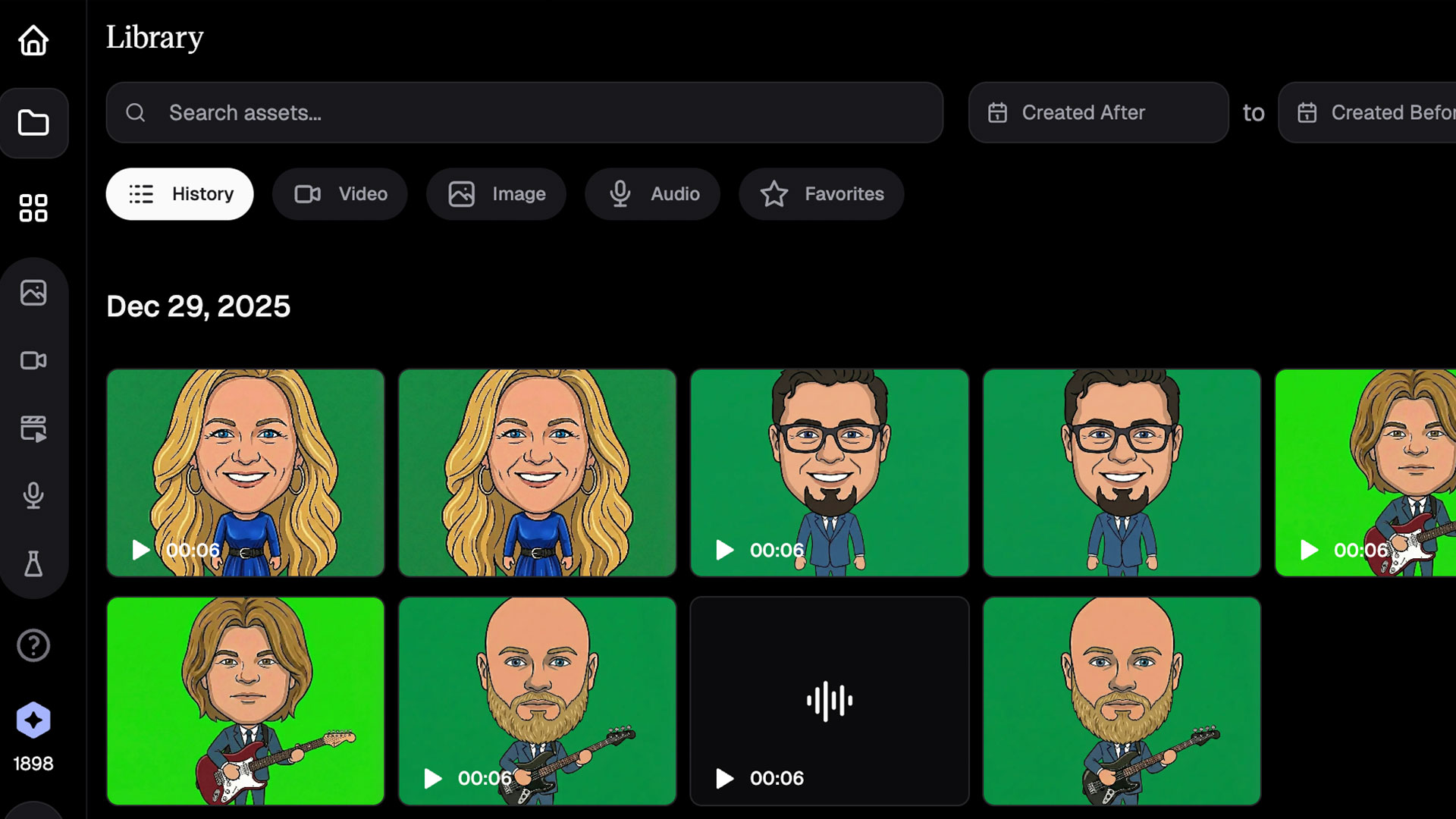

Our AI band members in Hedra, ready for chroma keying (green-screen compositing).

Here’s the list of tools that made the cut (we experimented with more, but these were used in the final). AI versions are noted where appropriate.

- Adobe After Effects

- Adobe Photoshop

- Adobe Premiere Pro

- Anthropic Claude (Opus 4.5)

- Apple Logic Pro

- Flow from Google Labs

- Google Gemini (3.1 quality and 3.1 fast)

- Hedra (Character 3)

- Microsoft Outlook

- Microsoft Word

- Nano Banana Pro from Google

- OpenAI ChatGPT (5.1 and 5.2)

- Runway ML (4.5)

- Suno (5)

- Zoom

Q: Where are we with AI for projects like this? Could you create a song and video with no technical or musical skills?

We’ve debated this internally. It depends on the output you need. Let’s use this project as an example to make it tangible, given current AI capabilities.

Song lyrics

We wrote almost all of the lyrics ourselves. Whether AI could write great lyrics for a project you’re working on, we’ll leave up to you. The eye of the beholder and all that…

Music and production

For the production, it’d be hard to overstate the time saved with Suno. Yes, we had a specific narrative we pursued, but Suno can produce professional-sounding songs with simple prompts. Professional songwriters are using Suno throughout the industry, and we believe that over time, it’ll empower people without musical backgrounds to tap into that side of their creativity. Taking the time to learn a little about music theory and structure could help, of course. But we also suspect that those who can naturally hold a tune or have other musical sensibilities will take to it like ducks to water (they can literally hum their ideas to life).

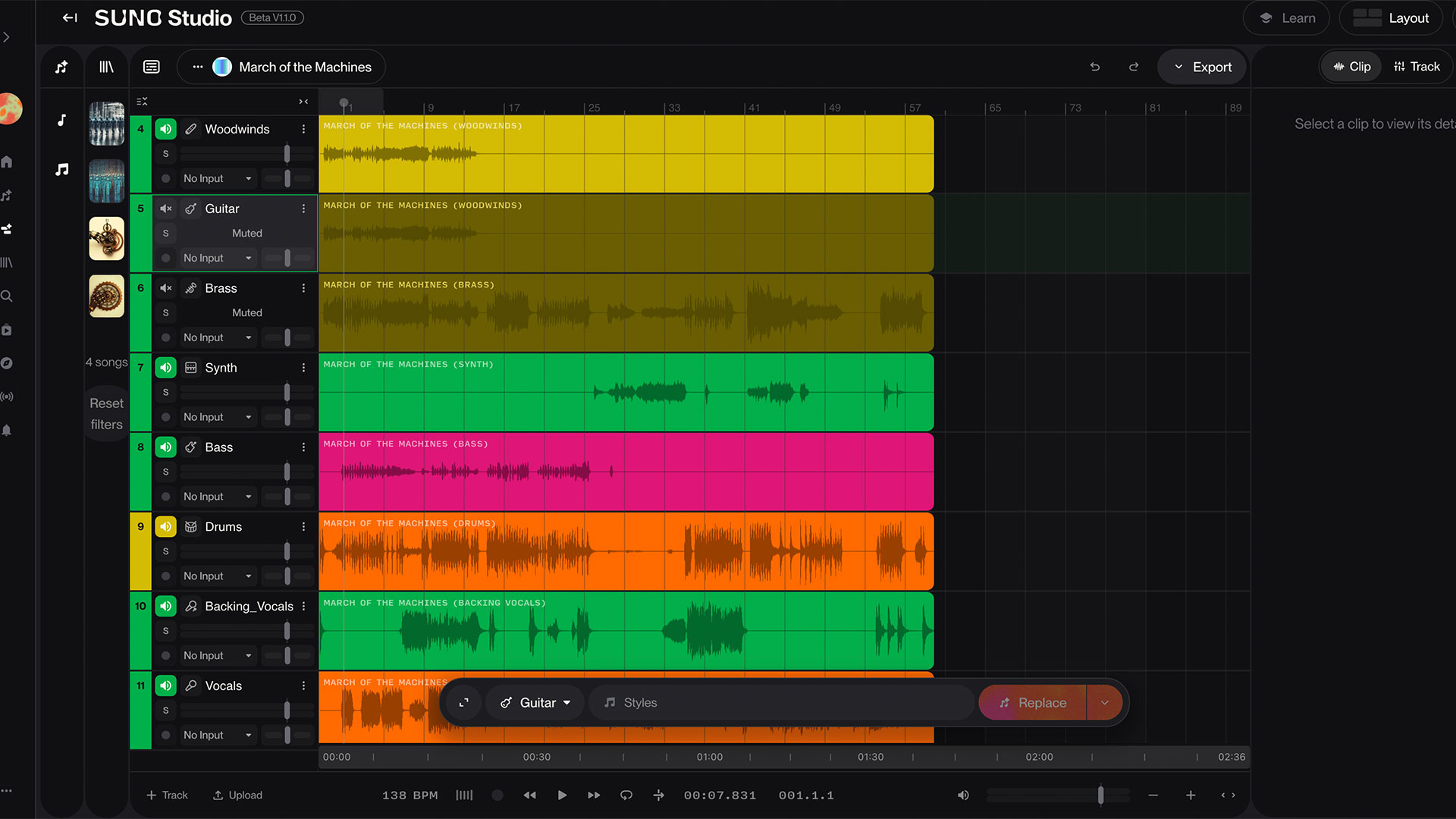

The biggest limitation at this point involves stems in Suno Studio, the DAW-style workspace available with the Premier plan. Getting stems is buggy because Suno currently generates all the music at once and then tries to recreate instrument-level parts on demand if you want them. Given the software’s growing importance in the pro music world, we suspect this will be a short-lived limitation.

An example of Suno Studio’s AI-powered cloud DAW (available with Premier plan subscriptions). Stem extraction was a little buggy at production time, but we think it will rapidly improve.

The bottom line: We’re a musical lot at BuzzTheory. That’s not unusual for creative teams. But with emerging AI tools, you don’t have to be. You can take an idea to Suno and create a professional-sounding, catchy song right now, even if you’ve never picked up an instrument or sung a word in your life.

Video production

We have considerable video production experience across our team and could have used puppet tools in Adobe (After Effects or even Premiere Pro at this point) to bring the band characters to life. Instead, because AI is central to the entire project, we opted to use AI as much as possible and let the chips fall where they would.

As with most AI-oriented experiences, there were serendipitous and frustrating moments throughout. Still, the bottom line was that production was significantly faster than a traditional workflow, even with the experiments and roadblocks that came from pushing the models against their current limitations.

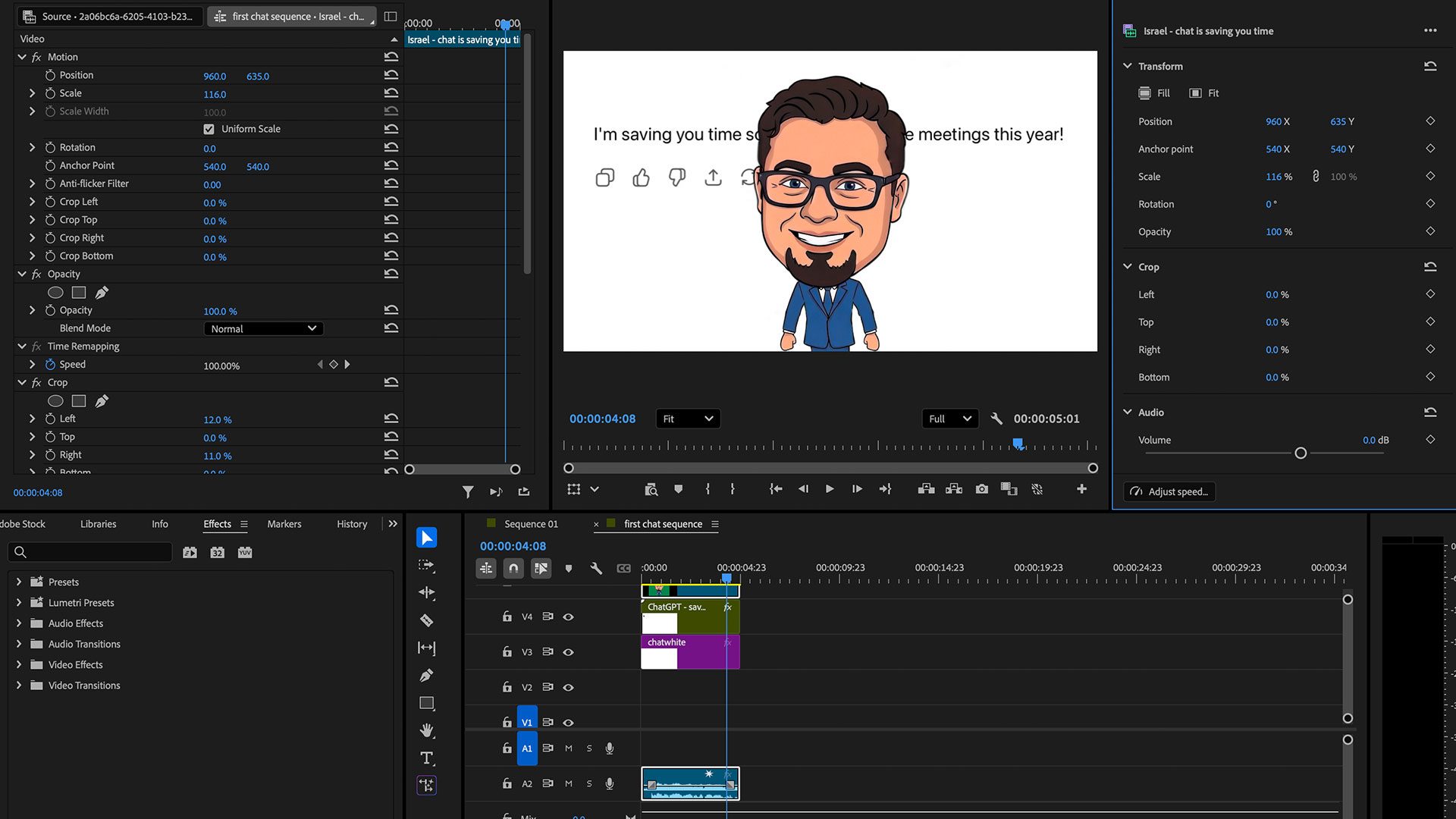

We still used some traditional production tools throughout, like Premiere Pro, though virtually all software tools are becoming AI-enhanced.

Layering the background, ChatGPT interface and avatar, and aligning the audio track in Premiere Pro.

We also used a couple of stock clips. We knew one clip would save us a lot of time, and we used another to test the current ability of AI to create a consistent extension of existing footage from an opposite POV.

Vision vs. precision

Obviously, you can create an AI video today with no production skills. And you don’t need extensive prompting knowledge either at this point (in fact, in some cases, less is becoming more). If you go back 12 to 18 months, we couldn’t have made this video without characters having Lego® teeth and eight fingers on each hand. In other words, it’s moving quickly, and software skills are becoming less vital.

In terms of where we are today and what creatives might expect when taking on an endeavor like this, perhaps the best measure is how much of your vision survives versus how much you have to change to accommodate AI limitations. Working with experienced production people, AI tool developers and prompt experts, we tried many tools to exhaust our options. The final is perhaps 70/30 (roughly 70% of what we aimed for survived, and 30% changed to fit where the tools could take us), with some strategic timing and a few animation “cheats” patching sections when there was no alternative.

Flexibility vs. speed

But (and this is a big “but”) we aimed for a particular mood and pace, and we wanted images that all our friends in the industry would relate to. The clips are stock-styled by design, but they’re highly customized and generated specifically for this video. We wanted a video that:

- Gains momentum and energy along with the piece.

- Captures the frenetic pace of the lives we’re all leading right now.

- Recognizes our shared experiences and humanity.

We developed all of this methodically, section by section. Is the final product exactly what we envisioned? No. But it’s not “not the vision” either. (Sounds a lot like working with AI in general right now, doesn’t it?)

If your project is flexible and you’re open to following AI down imagery rabbit holes, you could save time and end up with something reasonably entertaining without suffering through extensive tweaking and fixing. The more flexibility you have, the less frustrated you’ll be (which is why, at this point, AI video is more common in personal projects than in company projects with specific goals and brand considerations).

Don’t lose sight of your audience

However, and forgive us for the momentary self-reference, as a team that spends most of our waking hours helping companies generate revenue, we can’t overstate the importance of originality, focus and relevance. The more relevant you can make an original narrative, the more effective you’ll be both with and without AI. Keep your audience and the challenges they face (including AI encroachment into everything they’re doing, too) as your north star, and you’ll come out in good shape.

Q: How fast can you make an AI-powered song and music video like “Meetings of Love”?

“Like this” are the operative words. You could create “a” song and “a” music video in an afternoon. (Think: Feed a song theme to Suno and then use AI to develop general background imagery.)

Production time for a project like “Meetings of Love,” with custom lyrics and song structure and a planned video, depends tremendously on what you want and how willing you are to compromise when AI acts like, well, AI. You get considerably faster once you understand model limitations and how to adapt to them.

We probably couldn’t have created the song much faster than we did. But on the video front, if we had to make the same video again today, we’d probably finish it in a third to half the time it took the first time, now that we know which AI paths are dead ends (for now).

Note: “Human in the loop” isn’t good enough for something like this. You’ll be directing everything, down to the tiniest detail, if you want a good outcome. You’ll still need good content people on it.

Q: What were the biggest surprises you encountered during the project?

Let’s list our top five:

- AI randomness: As you might imagine, there were some crazy AI video generation moments. We expected occasional craziness, but it’s still perplexing when an AI tool that’s been consistently reliable jumps the shark. Even with our agency’s AI experience, we encountered some shockers, like the gem above, which turned up randomly amid normal variations.

- AI limitations: Sometimes, we couldn’t get what we wanted from any of the models, even when the concept was relatively simple from a creator’s point of view.

- AI abilities: On the other hand, we had some serendipitous moments with AI. Examples included a timelapse of an endless meeting as the sun rises and sets in the background, and a group of businesspeople singing, “Oh oh oh ohhh,” and ducking down and up in unison, which we were able to sync to the song using some timing cheats and alignments in Premiere Pro.

- Prompt variability: Depending on the need and the interface, we sometimes got better results with highly detailed prompts and other times with prompts that barely qualified as prompts at all. You get good at understanding when to start a new chat/prompt exchange, when to go one element at a time (as with our stage: we started with the framework, then added lights, then speakers), and when to pause. When a model is behaving squirrelly and you can’t steer it back on track, come back in a few hours or the next day.

- Production time (and… production time!): We were impressed by both the speed of production in some cases and how long it took in others. As AI improves, the slow parts will become faster, too. For now, the pace will be a lot more uneven than you think, and you’ll be surprised by what holds you up. You’ll nail a complex animation fast and think, “I’ve cracked this prompting code,” and then, during a simple animation, AI will seem to say, “Now, what was it that you thought you cracked?”